Update: This blog series has been updated for Ghost 2.x. If you've landed here looking to set up a new Ghost blog, you should follow the updated version.

In Part 1 we set up a Ghost blog running locally in Docker containers, wired together with Docker Compose. In Part 2 we deployed it to a Droplet (VPS) on DigitalOcean.

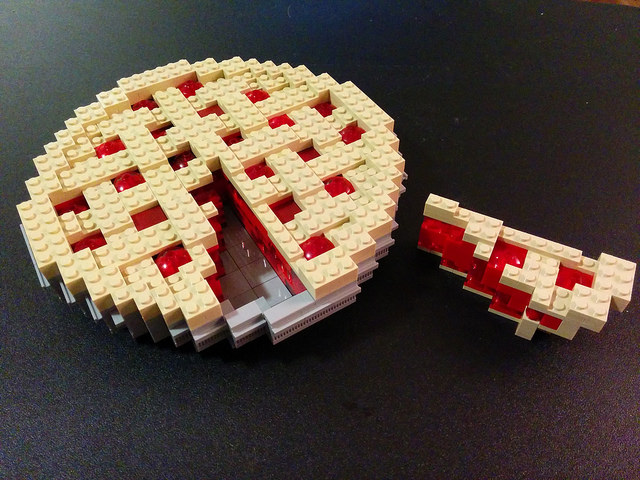

Along the way we've seen how building up our stack using Docker is a little like playing with Lego. We join together a bunch of useful, single-purpose bricks to make something bigger.

We now have a local and remote Ghost environment ready, but we're missing something: a way to keep them in sync. It's time to add the final piece to our Lego pie!

While we're at it, we'll also set up automated backups to Dropbox. It probably won't help us in the event of a giant solar storm, but in less extreme scenarios it might stop us from losing all those posts, gifs and memes!

This is the 3rd and final part of the series:

- Part 1: Setting up a Dockerised installation of Ghost with MariaDB

- Part 2: Deploying Ghost on DigitalOcean with Docker Compose

- Part 3: Syncing a Dockerised Ghost blog to Digital Ocean with automated backups

Cool, let's finish our setup!

Guide

- Why have a local environment?

- Taking a manual backup

- Adding automated backup with ghost-backup

- Putting our backups in Dropbox

- Restoring a backup

- Manual sync

- Using ghost-sync

- Testing the workflow

- Wrapping Up

Why have a local environment?

Before we continue, you might wonder why we're bothering with the local environment at all. We could use the Compose file we already have to bring up the stack on our Droplet, write and publish our posts there, and be done.

While that would be a valid approach (and might be all you need), there are some benefits to setting up our local environment:

- We can publish posts locally to check the formatting and how they will look in the wild (Ghost has a great live-preview of Markdown, but it is still not as good as a complete rendering, especially if you have custom CSS)

- We can modify our theme and detect problems before pushing it live

- We can work offline (or on a terrible Internet connection!)

Taking a manual backup

As we created a separate data-only container for our data, we could take a manual backup by running:

docker run --rm --volumes-from data-coderunner.io -v ~/backup:/backup ubuntu tar cfz /backup/backup_$(date +%Y_%m_%d).tar.gz /var/lib/ghost

This would fire up a new container using the ubuntu image, mount our data container volumes, and then create a compressed and dated tarball of the entire /var/lib/ghost folder into the ~/backup folder mounted on our host. Nice.

Taking a file dump of the database in this way should only be done while it is shut down or appropriately locked, or you risk data corruption.

Once we have our tarball, we could restore it later with a similar method. This is okay, but we could do better.
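As a rough sketch of that "similar method", the restore could look something like the function below. The archive name is a hypothetical example, and the DOCKER variable is just my own convenience so the command can be previewed with DOCKER=echo before running it for real. It assumes Ghost and the database are stopped while the files are put back.

```shell
#!/bin/sh
# Sketch: restore a tarball made by the manual backup command above.
# DOCKER can be overridden (for example DOCKER=echo) to preview the command.
DOCKER="${DOCKER:-docker}"

restore_tarball() {
  # $1: archive file name inside ~/backup (hypothetical, e.g. backup_2016_01_31.tar.gz)
  archive="$1"
  # tar stored the paths without the leading / (as var/lib/ghost/...),
  # so extracting with -C / puts everything back under /var/lib/ghost.
  "$DOCKER" run --rm \
    --volumes-from data-coderunner.io \
    -v "$HOME/backup:/backup" \
    ubuntu tar xfz "/backup/$archive" -C /
}
```

Calling restore_tarball backup_2016_01_31.tar.gz would then unpack that archive into the data container's volumes.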

We want to avoid having to shut down our database for the backup, and we would be better off using dedicated tools to handle it. If you're running Ghost with SQLite there is the online backup API, and for MySQL/MariaDB there is mysqldump. Also, it would be nice to have it automated.
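To give an idea of what the mysqldump route looks like by hand, here is a minimal sketch. It makes a few assumptions that are not from this series: that the database container is named mariadb-coderunner.io, that it was started with a MYSQL_ROOT_PASSWORD environment variable, and that the database is called ghost. The DOCKER variable is only there so the command can be previewed with DOCKER=echo.

```shell
#!/bin/sh
# Sketch: dump the Ghost database from the running MariaDB container.
DOCKER="${DOCKER:-docker}"

dump_database() {
  # $1: database name (assumed to be "ghost" if not given)
  db="${1:-ghost}"
  # --single-transaction dumps InnoDB tables from a consistent snapshot
  # without locking them, so the database can stay up.
  # \$MYSQL_ROOT_PASSWORD is expanded inside the container, not on the host.
  "$DOCKER" exec mariadb-coderunner.io \
    sh -c "exec mysqldump --single-transaction -uroot -p\"\$MYSQL_ROOT_PASSWORD\" $db"
}

# Usage: dump_database ghost > ~/backup/ghost_$(date +%Y_%m_%d).sql
```

Remembering to run this, and tidying up old dumps, is exactly the kind of chore worth handing off to a container.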

For that purpose, I created ghost-backup, a separate container for managing backup and restore of Ghost.

Adding automated backup with ghost-backup

The ghost-backup image is published on Docker Hub. We can use it by adding this to our docker-compose.yaml file:

    # Ghost Backup
    backup-blog-coderunner.io:
      image: bennetimo/ghost-backup
      container_name: "backup-blog-coderunner.io"
      links:
        - mariadb-coderunner.io:mysql
      volumes_from:
        - data-coderunner.io

This will create a ghost-backup container linked to our database container, taking a snapshot of our database and files every day at 3am and storing them in /backups.

The database link needs to be named mysql as shown, as this becomes the hostname that the container uses to communicate with the database.

To change the defaults, for example changing the directory or backup schedule, you can customise the configuration.

Because the Docker linking system exposes all of a source container's environment variables, the container can authenticate with MariaDB without us having to configure anything.

Putting our backups in Dropbox

At the moment our backups are just being created on the Droplet. We should have a copy stored offsite to at least get us the '1' in a 3-2-1 backup strategy.

Seeing as we're dealing with a blog and not mission-critical data, one simple thing we can do is just push our backups to Dropbox, and there is a headless Linux client which makes this trivial.

We just need to set it up, and then change our backup location to use the Dropbox folder. Of course we're using Docker, so we should use a container for it! I put together a simple one in docker-dropbox.

We just need to add it to our docker-compose.yaml:

    # Dropbox
    dropbox:
      image: bennetimo/docker-dropbox
      container_name: "dropbox"

And then add the Dropbox container volume to our ghost-backup container:

    volumes_from:
      - data-coderunner.io
      - dropbox

The first time you launch the container, you'll see a link in the logs that you need to visit to connect with your Dropbox account.
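If the logs are noisy, a small helper like this can fish the link out. It's only a sketch, and it assumes the link shows up in the logs as a plain http(s) URL; the DOCKER variable is my own addition so the docker binary can be swapped out.

```shell
#!/bin/sh
# Sketch: print any URLs found in the dropbox container's logs.
DOCKER="${DOCKER:-docker}"

show_dropbox_link() {
  # Merge stderr into stdout, then keep only the URL part of matching lines.
  "$DOCKER" logs dropbox 2>&1 | grep -Eo 'https?://[^ ]+'
}
```

Running show_dropbox_link after the first docker-compose up should print the URL to open in a browser.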

Finally, we tweak our ghost-backup config to use the Dropbox folder as its location:

    environment:
      - BACKUP_LOCATION=/root/Dropbox/coderunner

And we're done. All our backups will now make their way to Dropbox.

By including the backup container in docker-compose.yaml it will be part of our local and live setup. As we'll see below that's what we want, but it probably makes sense to disable automated backups locally in docker-compose.override.yaml.

Restoring a backup

A backup is no use if we can't restore it, and we can do that with:

docker exec -it backup-blog-coderunner.io restore -i

This will present a choice of all the backup archives found and ask which to restore. Alternatively, we can restore by file or by date.

Manual sync

Essentially a sync is a snapshot of our local environment, restored onto our live environment. As we now have our ghost-backup container configured on both, we could:

1. Take a manual backup on the local environment (we can use docker exec backup-blog-coderunner.io backup for that)

2. scp the created database and files archive to the Droplet

3. Restore the archives on the Droplet with docker exec backup-blog-coderunner.io restore -f /path/to/file

For step 3 we would need to either use docker cp to put the archives into the ghost-backup container, or mount a directory from the host to the container for our restore archives.
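Here is a minimal sketch of the docker cp variant of step 3, to be run on the Droplet once the archives have been copied over. The /tmp paths and archive names are hypothetical, and the DOCKER variable is my own addition for previewing the commands with DOCKER=echo.

```shell
#!/bin/sh
# Sketch: copy an archive into the ghost-backup container and restore it.
DOCKER="${DOCKER:-docker}"

restore_on_droplet() {
  # $1: path to an archive already copied to the Droplet (hypothetical name)
  archive="$1"
  name=$(basename "$archive")
  # Copy the archive into the ghost-backup container...
  "$DOCKER" cp "$archive" "backup-blog-coderunner.io:/tmp/$name" &&
  # ...then restore it by file.
  "$DOCKER" exec backup-blog-coderunner.io restore -f "/tmp/$name"
}

# Step 2, run locally first (placeholders to fill in):
#   scp ~/backup/<archive> <dropletuser>@<dropletip>:/tmp/
```

We would call restore_on_droplet once for the database archive and once for the files archive.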

This approach would work, but it's a bit cumbersome and manual. With Dropbox set up we avoid step 2, but we also have to keep checking our sync folder until our files are ready to restore.

If our posts use a lot of images, our ghost files archive will also quickly become quite large to keep shipping around.

For simpler, 'one button' sync I created ghost-sync.

Using ghost-sync

ghost-sync uses rsync to transfer the images, so it's incremental, only copying across anything new. For the database sync, it piggybacks off ghost-backup.
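To illustrate why rsync makes this incremental, here is a hand-rolled sketch of pushing an images folder over SSH. This is not necessarily the exact command ghost-sync runs; the flags, the argument layout and the RSYNC override variable are my own assumptions for the example.

```shell
#!/bin/sh
# Sketch: push a local images folder to the Droplet with rsync over SSH.
RSYNC="${RSYNC:-rsync}"

push_images() {
  # $1: local images directory, $2: user@host, $3: sync folder on the Droplet
  # -a preserves permissions and timestamps, -z compresses data in transit.
  # rsync skips files that already match on the remote side, so repeat runs
  # only transfer new or changed images.
  # The trailing slash on the source copies the directory's contents,
  # not the directory itself.
  "$RSYNC" -az -e ssh "$1/" "$2:$3/images/"
}
```

The first run copies everything, and later runs only send what has changed since.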

To set it up, we first need to add it to our docker-compose.override.yaml (as we only want it locally):

    sync-blog-coderunner.io:
      image: bennetimo/ghost-sync
      container_name: "sync-blog-coderunner.io"
      entrypoint: /bin/bash
      environment:
        - SYNC_HOST=<dropletip>
        - SYNC_USER=<dropletuser>
        - SYNC_LOCATION=<syncfolder>
      volumes:
        - ~/.ssh/<privatekey>:/root/.ssh/id_rsa:ro
        - /var/run/docker.sock:/var/run/docker.sock:ro
      volumes_from:
        - backup-blog-coderunner.io
      links:
        - backup-blog-coderunner.io:backup

There are a few things going on here, so let's break it down.

We have overridden the entrypoint, which is the command that is run when the image is started, to prevent a sync happening when we up.

This issue tracks potential support for services that can be configured not to auto-start in Compose

We also mount an appropriate SSH private key and set some environment variables so we can make a connection to the Droplet. The syncfolder is where ghost-sync will rsync all of the images to.

Finally for the database sync we need to be able to talk to the ghost-backup container, so we add it as a link and a volume, and mount the docker socket.

Now we just need to make two small additions in docker-compose.live.yaml:

    data-coderunner.io:
      volumes:
        - /sync/coderunner.io/images:/var/lib/ghost/images

    backup-blog-coderunner.io:
      volumes:
        - /sync/coderunner.io:/sync/coderunner.io:ro

We mount syncfolder/images as the Ghost images folder in our data-only container, so we can rsync directly to it. And we mount the syncfolder again in the backup container, so that we'll be able to initiate a restore of our database archive from there.

ghost-sync can also sync the themes and apps folders with the -t and -a flags.

At this point we have a way to sync between our environments. Let's test it out!

Testing the Workflow

If you have followed everything up to this point, then you now have everything in place to enable our desired workflow.

- Create content at http://coderunner.io.dev/ghost

- Once happy, run docker-compose run --rm sync-blog-coderunner.io sync -id to push the content live by syncing the database and images

- View the content on http://coderunner.io

And we're done!

Wrapping Up

Now that you have a nice new Ghost blog set up, here are a few other things you might want to explore.

- Customising your theme

- The default Casper theme is a nice starting point, but there are loads of great free (and paid) themes available at places like Ghost Marketplace, All Ghost Themes or Theme Forest. I have another little container ghost-themer which might be useful for trying some out.

- Adding Google Analytics

- Adding comments

- Adding other blogs/services; our modular Dockerised setup means we can set up other things behind our reverse proxy nice and simply. Of course you might need to upgrade to a more powerful Droplet :)

If you have any questions or suggestions about anything then feel free to leave a comment below :)